The multiple choice format is perhaps the most familiar test format for many of us. It is also commonly referred to as an objective test because its scoring is seen as “objective”. In this blog post, we will examine the multiple choice format in terms of its structure, use, and construction.

The structure of the multiple choice test

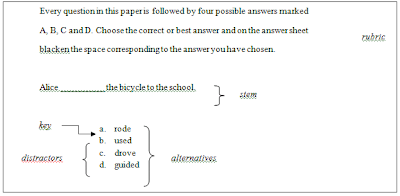

Multiple choice items consist of a stem and a set of options. The stem is the beginning part of the item. It presents the problem to be solved, the question to be answered, or an incomplete statement to be completed, together with any other relevant information. The options are the possible answers from which the test taker chooses: the correct answer is called the key, and the incorrect answers are called distractors. These components are illustrated in Figure 1.

Figure 1: The structure of a multiple choice item

The diagram above lays out the major components of the multiple choice format. Note that the number of alternatives in a multiple choice item usually ranges from three to five.

Use of the multiple choice format

There are a number of situations in which a multiple choice test may be useful and appropriate:

- When there is a large number of students taking the test

- When you wish to reuse the questions in the test

- When you have to provide the grades quickly

- When highly reliable test scores must be obtained as efficiently as possible

- When impartiality and fairness of evaluation, free from scoring influences such as scorer fatigue, are essential

- When you are more confident of your ability to write clear, valid objective test items than of your ability to judge essay answers fairly

- When you want specific information, especially for diagnostic feedback

Advantages

- The ability to create a test item bank

- Quick grading

- High reliability

- Objective grading

- Wide coverage of content

- Precision in providing information regarding specific skills and abilities

Constructing the multiple choice item

Two broad issues need to be considered when constructing an MCQ test: how to select distractors and how to construct good test items.

i) Plausible distractors can be drawn from a number of sources:

o Students’ previous incorrect responses

o Incorrect form, meaning, style or register

o Translations from the native language

o Variations of the key

o Contextual relevance

Constructing good test items

There are two issues to consider in constructing good test items:

a) Unintentional cues in the item, which include the following:

i) Inconsistent length of alternatives

The way this item is written draws undue attention to the last alternative. Since we are interested in measuring the student’s true language ability (in this case, comprehension), it is unfair to draw so much of the student’s attention to a single alternative. If a student selects this alternative, it will be difficult to determine whether he or she was attracted by its noticeably different length or genuinely believed it to be the answer. It is bad enough that option D stands out in this way, but it is even worse if it is the key: we will then be unable to tell whether the student actually knew the answer or was at a total loss and chose the alternative simply because it stood out.

ii) Inconsistent categories of alternatives

Look at the following list of distractors and consider what cue is provided to the test taker.

In the list of distractors above, all the options except D. talkative, an adjective, are verbs. This draws undue attention to option D and increases the likelihood that it will be selected regardless of whether or not it is the key.

iii) Convergence cues

The cue in the following item is less obvious and may therefore go undetected more often. The diagram below shows only the alternatives, without the stem, to better illustrate how it works.

Even without the stem, many test takers would likely select A. tea, because two of the options, tea and tee, sound alike. Between these two, tea would probably be chosen over tee because all the options refer to a gathering of some sort at which food is served. These cues therefore converge on option A. tea as the most likely answer.

iv) Nonsense distractors

Nonsense distractors should be avoided, unless students are actually experiencing problems with the language and the teacher intentionally includes nonsense words as distractors to diagnose whether they have such problems. The diagram below shows a set of alternatives that contains a nonsense distractor.

Option B above does not exist in English and should be removed from the list of alternatives immediately.

v) Common knowledge response

Another problem with selected-response formats is that students may choose an answer based on common knowledge rather than on the skill we intend to assess. For example, consider the following test item:

While it is true that the question may have come from the reading passage, the answer is obvious even to those who have not read and understood the passage.

b) Reaction to the item

The test context can be taxing and anxiety-inducing, and test writers should avoid adding to this anxiety. Instead, they should consciously try to help students by reducing anxiety, wasted time and other unnecessary reactions to the test. This section discusses several item-writing problems related to this issue: redundancy, trick questions, and improper sequencing of alternatives.

i) Redundancy

Some items are written in such a way that students must read unnecessary information. In a test where time is often at a premium, such items should be avoided. The following is an example of an item that includes redundant language.

Each alternative begins with the phrase “because he”. It would have been much more helpful to students if this phrase had been placed in the stem, so that they did not have to read it over and over again.

ii) Trick questions

In the following example, the item is written in such a way that the student needs to read it more than once to understand what it actually requires.

The multiple negatives in the stem (“not appropriate”, “not to be absent”) make it confusing. Since our intention in a test is not to confuse students but to measure their ability, this sort of item should be avoided.

iii) Improper sequencing

Disorganised sequencing of alternatives also adds unnecessary cognitive load for students. If the alternatives are days of the week or months of the year, they should be arranged in chronological order. Similarly, in reading comprehension items, alternatives taken from the passage should be arranged in the order in which they appear in the passage.
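Both ordering rules are mechanical enough to apply automatically when assembling items from an item bank. The Python sketch below is only an illustration: the function names `order_chronologically` and `order_by_passage` are hypothetical, and locating alternatives in the passage by plain substring search is a simplifying assumption (it uses the first occurrence and can match inside longer words).

```python
WEEKDAYS = ["Monday", "Tuesday", "Wednesday", "Thursday", "Friday", "Saturday", "Sunday"]


def order_chronologically(options, sequence=WEEKDAYS):
    # Hypothetical helper: sort alternatives by their position in a fixed
    # sequence such as the days of the week (or a list of months).
    return sorted(options, key=sequence.index)


def order_by_passage(options, passage):
    # Hypothetical helper: sort alternatives taken from a reading passage by
    # where they first appear in it (simple substring search, an assumption).
    positions = {opt: passage.find(opt) for opt in options}
    missing = [opt for opt, pos in positions.items() if pos == -1]
    if missing:
        raise ValueError(f"alternatives not found in passage: {missing}")
    return sorted(options, key=positions.__getitem__)
```

For example, `order_chronologically(["Friday", "Monday", "Wednesday", "Tuesday"])` returns `["Monday", "Tuesday", "Wednesday", "Friday"]`. Raising an error for alternatives that cannot be found is a deliberate choice, since such an option was presumably not taken from the passage and needs to be placed by hand.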

Criticism of the MCQ format

Hughes (1989) lists several other problems with the MCQ format:

- The technique tests only recognition knowledge and recall of facts

- Guessing may have a considerable but unknowable effect on test scores

- The technique severely restricts what can be tested to lower-order skills

- It is very difficult to write successful items, especially because good distractors are hard to find

- Backwash can be harmful for both teaching and learning, as preparing for a multiple choice test does not reflect good language teaching and learning practice

- Cheating may be facilitated

- It places heavy dependence on the student’s reading ability and the instructor’s writing ability

- It is time-consuming to construct
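Hughes does not quantify the guessing effect, and in practice it cannot be known exactly, because students rarely guess blindly. A rough baseline can still be computed under the simplifying assumption that a student guesses blindly on every item and picks each option with equal probability: the number of correct answers then follows a binomial distribution with success probability 1/k for items with k options. The Python sketch below is illustrative only, and its function names are hypothetical.

```python
from math import comb


def expected_chance_score(num_items: int, num_options: int) -> float:
    # Under blind guessing each item is answered correctly with probability
    # 1/num_options, so the expected number correct is num_items / num_options.
    return num_items / num_options


def prob_at_least_by_chance(num_items: int, num_options: int, cutoff: int) -> float:
    # Binomial upper tail: P(X >= cutoff) where X ~ Binomial(num_items, 1/num_options),
    # i.e. the chance of reaching `cutoff` correct answers by blind guessing alone.
    p = 1 / num_options
    return sum(
        comb(num_items, k) * p**k * (1 - p) ** (num_items - k)
        for k in range(cutoff, num_items + 1)
    )
```

On a 40-item test with four options per item, `expected_chance_score(40, 4)` returns `10.0`, a quarter of the marks obtained with no knowledge at all; with three options the baseline rises to a third. Partial knowledge (for example, eliminating one obviously wrong distractor) raises the real figure above this baseline, which is part of why the true effect is unknowable.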

Hughes (1989:3) goes on to say that “good multiple choice items are notoriously difficult to write. A great deal of time and effort has to go into their construction. Too many multiple choice tests are written where such care and attention is not given (and indeed may not be possible). The result is a set of poor items that cannot possibly provide accurate measurements.”
